New America on Twitter

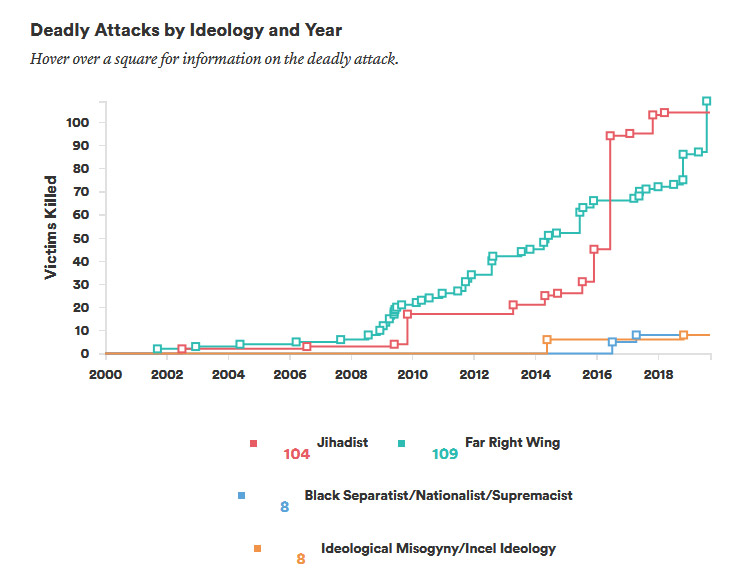

"Though there are many ideological strands, and attackers' ideological reference points are often in flux or complex, one particular ideological strand - white supremacy - stands out as a particular danger." -From @peterbergencnn's @HomelandDems testimony today

Part IV. What is the Threat to the United States Today?

The Terrorist Threat is From Across the Political Spectrum

The main terrorist threat today in the United States is best understood as emerging from across the political spectrum, as ubiquitous firearms, political polarization, and other factors have combined with the power of online communication and social media to generate a complex and varied terrorist threat that crosses ideologies and is largely disconnected from traditional understandings of terrorist organizations.

No jihadist foreign terrorist organization has directed a deadly attack inside the United States since 9/11, and no deadly jihadist attacker has received training or support from groups abroad. In the almost 18 years after 9/11, jihadists have killed 104 people inside the United States. This death toll is virtually the same as that from far-right terrorism (consisting of anti-government, white supremacist, and anti-abortion violence), which has killed 109 people. The United States has also seen attacks in recent years inspired by ideological misogyny and black separatist/nationalist ideology. Individuals motivated by these ideologies have killed eight people each. America's terrorism problem today is homegrown and is not the province of any one group or ideological perspective.

FSA's Machine Learning Hurts Low-Income Student Enrollment

Automated over-selection of low-income students for FAFSA verification is problematic

By Alejandra Acosta

Aug. 20, 2019

This month, many low-income students who planned to enroll in college will not show up for their first day of class. The culprit may be a problematic algorithm that flagged them for FAFSA verification, a tedious process in which students must document their family income (and other information) in order to receive their financial aid.

FAFSA verification overwhelmingly affects low-income students. A whopping 98% of all students selected for verification in the 2015-2016 financial aid year were Pell-eligible. About half of all Pell Grant applicants were selected for verification, and one in four of those selected did not complete the process. Only 56% of Pell applicants selected for verification received their Pell Grant, which means that millions of low-income students did not receive funding essential to enrolling in higher education. FAFSA verification clearly creates major obstacles for too many low-income students.

In 2018, the Department of Education's office of Federal Student Aid (FSA) announced that it had implemented machine learning in its verification process to address a surge in the overall number of students selected for verification. The hope was that machine learning would help FSA select fewer students and target them more accurately. But given what we know about how verification currently works, and about the shortcomings of data, algorithms, and machine learning, the use of this tool is very concerning.

A recent report from the Department of Education's Office of Inspector General (OIG) concluded that it is unclear whether FSA's verification process effectively identified FAFSAs with errors. OIG found that FSA "did not evaluate its process for selecting FAFSA data elements to verify," meaning that it did not test whether errors in elements such as household income and number of family members actually result in improper payments. FSA outsourced the creation of its algorithm to an aerospace and defense corporation (which then subcontracted the task to a consulting company) and did not evaluate whether the algorithm worked effectively before putting it to use. Layering machine learning on top of this algorithm will only perpetuate its problematic outcomes.
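To make OIG's point concrete, here is a minimal sketch of one way an agency could check whether verifying a given data element actually catches errors that matter: for each element, compute the share of verifications in which correcting it changed the aid award. The records, element names, and the `error_yield_by_element` helper are all hypothetical and illustrative; this is not OIG's or FSA's actual methodology, and no real FSA data is used.

```python
from collections import defaultdict

# Hypothetical verification outcomes (illustrative only, NOT real FSA data):
# each record is (data element verified, whether correcting it changed the award).
outcomes = [
    ("household_income", True),
    ("household_income", False),
    ("household_income", False),
    ("household_size", False),
    ("household_size", False),
    ("household_size", True),
    ("household_size", False),
]


def error_yield_by_element(records):
    """Return, for each data element, the share of verifications that changed the award."""
    checked = defaultdict(int)
    changed = defaultdict(int)
    for element, award_changed in records:
        checked[element] += 1
        if award_changed:
            changed[element] += 1
    return {element: changed[element] / checked[element] for element in checked}


yields = error_yield_by_element(outcomes)
for element, rate in sorted(yields.items()):
    print(f"{element}: {rate:.0%} of verifications changed the award")
```

An element whose verification rarely changes anyone's award imposes paperwork on students without preventing improper payments; measuring this per element is the kind of evaluation OIG says was missing.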

Machine learning algorithms "predict the future" based on the past: they identify trends from statistical correlations in a data set. Because the existing algorithm almost exclusively selects Pell-eligible students for verification, a machine learning model that learns from those past selections will only continue this trend. If the underlying statistical model is not changed, any decrease in the overall number of students selected for verification will likely still come at the expense of low-income students.
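The toy simulation below illustrates this feedback effect under stated assumptions. It invents a historical record in which an old rule flagged roughly half of Pell-eligible applicants and about 1% of everyone else, "trains" a deliberately minimal model (the historical selection rate per group, standing in for a real classifier), and then uses that model to flag a smaller number of new applicants. All data, rates, and pool sizes are hypothetical; FSA's actual model and features are not public in this post.

```python
import random

random.seed(0)

# Hypothetical history: (pell_eligible, was_selected_for_verification).
# Assumption for illustration: the old rule selected almost only Pell-eligible applicants.
history = []
for _ in range(10_000):
    pell = random.random() < 0.4
    selected = random.random() < (0.5 if pell else 0.01)
    history.append((pell, selected))


def fit_selection_rates(rows):
    """'Train' a minimal model: the historical selection rate for each group."""
    totals = {True: 0, False: 0}
    hits = {True: 0, False: 0}
    for pell, selected in rows:
        totals[pell] += 1
        hits[pell] += selected  # bool counts as 0 or 1
    return {group: hits[group] / totals[group] for group in totals}


model = fit_selection_rates(history)

# New applicant pool (True = Pell-eligible). Goal: flag FEWER students overall (1,000).
random.seed(1)
applicants = [random.random() < 0.4 for _ in range(10_000)]
ranked = sorted(applicants, key=lambda pell: model[pell], reverse=True)
flagged = ranked[:1_000]

share_pell = sum(flagged) / len(flagged)
print(f"Learned selection rates: {model}")
print(f"Share of flagged applicants who are Pell-eligible: {share_pell:.0%}")
```

In this toy setup, cutting the number flagged does nothing to spread the burden: the model ranks Pell-eligible applicants first because that is what the past selections taught it, so every flagged applicant is Pell-eligible.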

FSA needed to walk before it ran: it should have taken more care with the statistical model that flagged students for verification before using machine learning to let a computer run with a problematic algorithm. Unfortunately, FSA's assumption that its algorithm worked well not only put millions of students through hell but also failed to prevent financial aid from being disbursed incorrectly: over $790 million in improper Pell Grant payments were still made in fiscal year 2017.

Verifying students' family financial picture is warranted (we don't want folks gaming the system in yet another way), but an algorithm that disproportionately puts vulnerable students through an arduous process deserves a second look before it is automated. FSA should follow OIG's recommendations to evaluate whether the data elements it selects for verification are effective and to evaluate the work of its contractor (which FSA did not agree to do in its response to OIG's report). FSA should also adopt some of New America's recommendations for institutions selecting a predictive analytics vendor, such as asking how effective the model is or requesting a disparate impact analysis.
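A disparate impact analysis can start with something as simple as comparing selection rates across groups. The sketch below borrows the "four-fifths" screening heuristic from U.S. employment-selection guidelines as an assumed threshold: it treats avoiding verification as the favorable outcome and flags a disparity when one group's pass-through rate is less than 80% of the other's. The group counts, the `Group` class, and the `disparate_impact` helper are hypothetical, and this is not New America's prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Group:
    name: str
    applicants: int
    flagged: int  # number selected for verification (hypothetical counts)

    @property
    def flag_rate(self) -> float:
        return self.flagged / self.applicants


def disparate_impact(group_a: Group, group_b: Group, threshold: float = 0.8):
    """Compare how often two groups avoid verification (the favorable outcome).

    Returns the ratio of the lower pass-through rate to the higher one, and
    whether it falls below `threshold` (four-fifths heuristic, assumed here).
    """
    pass_a = 1 - group_a.flag_rate
    pass_b = 1 - group_b.flag_rate
    ratio = min(pass_a, pass_b) / max(pass_a, pass_b)
    return ratio, ratio < threshold


pell = Group("Pell-eligible", applicants=1_000, flagged=500)
non_pell = Group("Not Pell-eligible", applicants=1_000, flagged=10)

ratio, disparate = disparate_impact(pell, non_pell)
relative_risk = pell.flag_rate / non_pell.flag_rate
print(f"Pass-through ratio: {ratio:.2f} (below 0.8: {disparate})")
print(f"Pell-eligible applicants are {relative_risk:.0f}x as likely to be flagged")
```

Reporting both numbers is a design choice: the ratio gives a pass/fail screen, while the relative risk shows how large the gap actually is, which a vendor should be able to produce for any model it sells.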

As more higher education institutions and organizations adopt big data, predictive analytics, and machine learning, it is essential that they pause to think critically about their goals for using these tools, whether there is truly a need for them, and what impacts and risks they carry. When used ethically, equitably, and with care, predictive analytics and machine learning can help us do our jobs better. But if we don't walk before we run, our well-intended efforts could backfire, for example by deterring millions of low-income students from enrolling in higher education.

To see why FAFSA verification must be administered carefully, listen to this student's story about the frustrations and setbacks that can arise during the college admissions process: